Introduction

We are excited to announce support for Convergent Science products on the HPCBOX™ HPC Cloud Platform. This article details the capabilities and the benefits provided by the HPCBOX Platform for CONVERGE users.

CONVERGE

CONVERGE is a cutting-edge CFD software package that eliminates all user meshing time through fully autonomous meshing. CONVERGE automatically generates a perfectly orthogonal, structured grid at runtime based on simple, user-defined grid control parameters. Thanks to its fully coupled automated meshing and its Adaptive Mesh Refinement (AMR) technology, CONVERGE can easily analyze complex geometries, including those with moving boundaries. Moreover, CONVERGE contains an efficient detailed chemistry solver, an extensive set of physical submodels, a genetic algorithm optimization module, and fully automated parallelization.

HPCBOX

HPCBOX delivers an innovative, desktop-centric, workflow-enabled platform for users who want to accelerate innovation using cutting-edge technologies that can benefit from High Performance Computing infrastructure. It aims to make supercomputing technology accessible and easier for scientists, engineers and other innovators who do not have access to in-house datacenters or the skills to create, manage and maintain complex HPC infrastructure. To achieve a high level of simplicity in delivering a productive HPC platform, HPCBOX leverages unique technology that not only allows users to create workflows combining their applications and optimization of the underlying infrastructure into the same pipeline, but also provides integration points to help third-party applications hook directly into its infrastructure optimization capability.

Some of the unique capabilities of HPCBOX are:

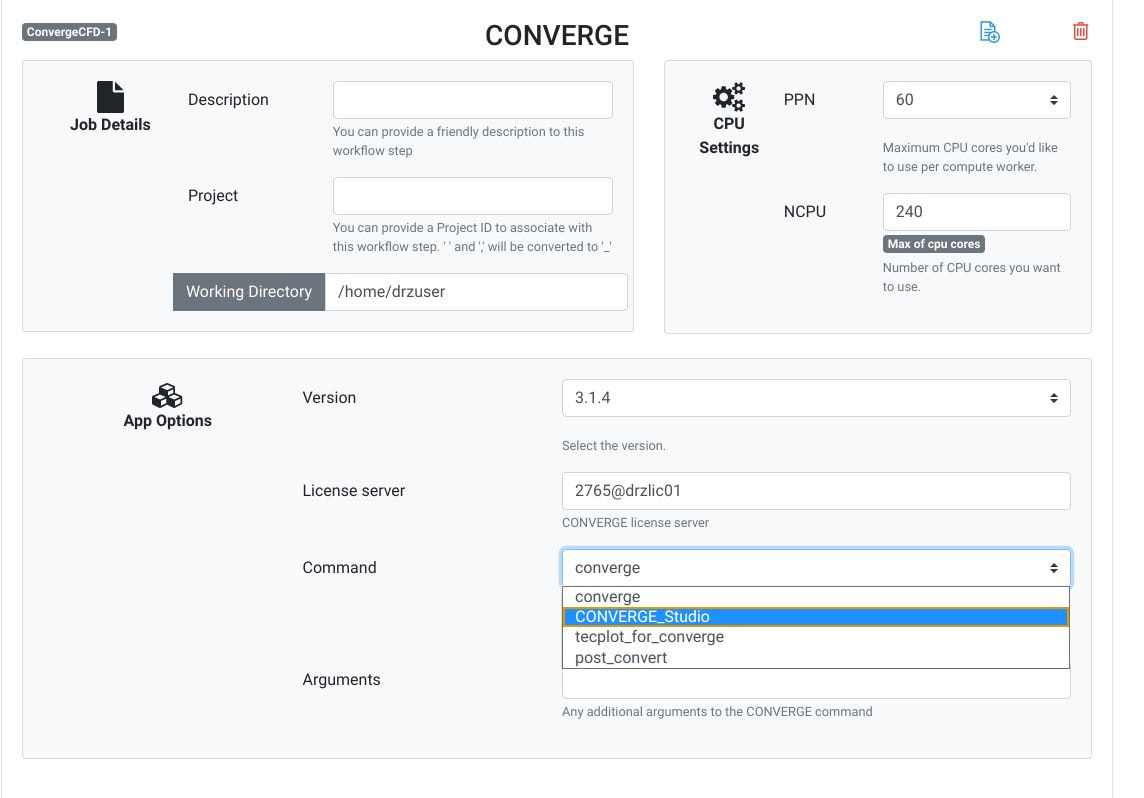

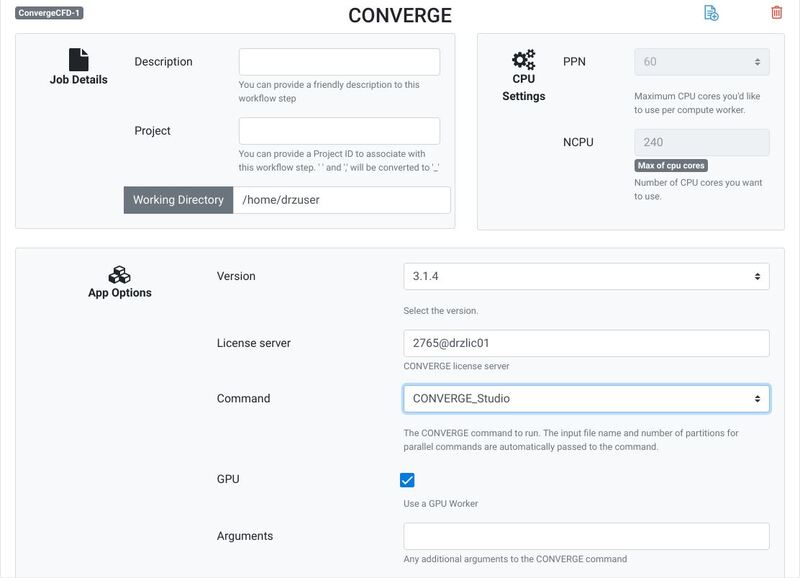

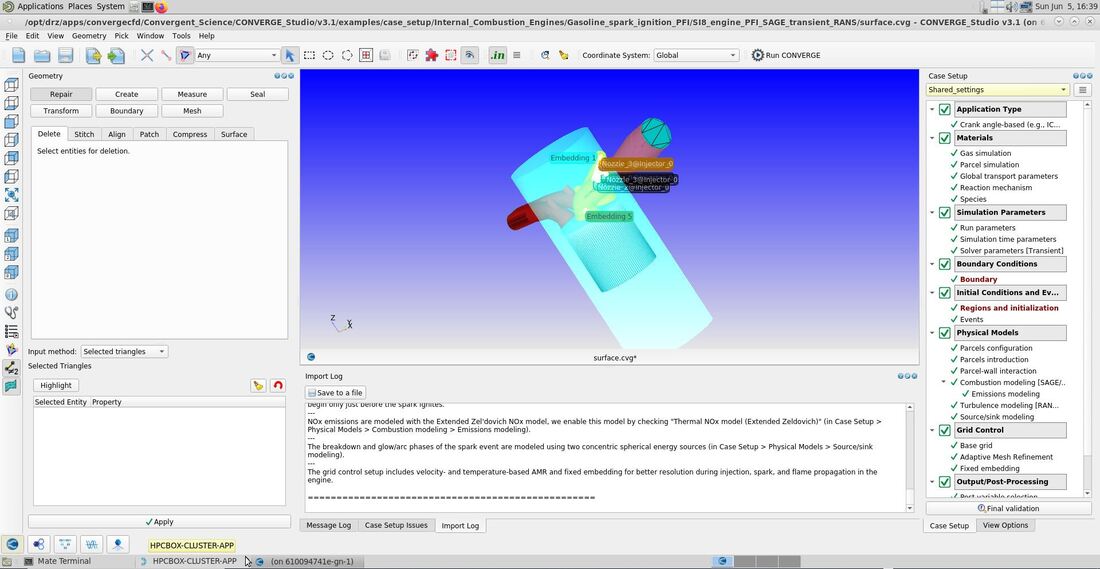

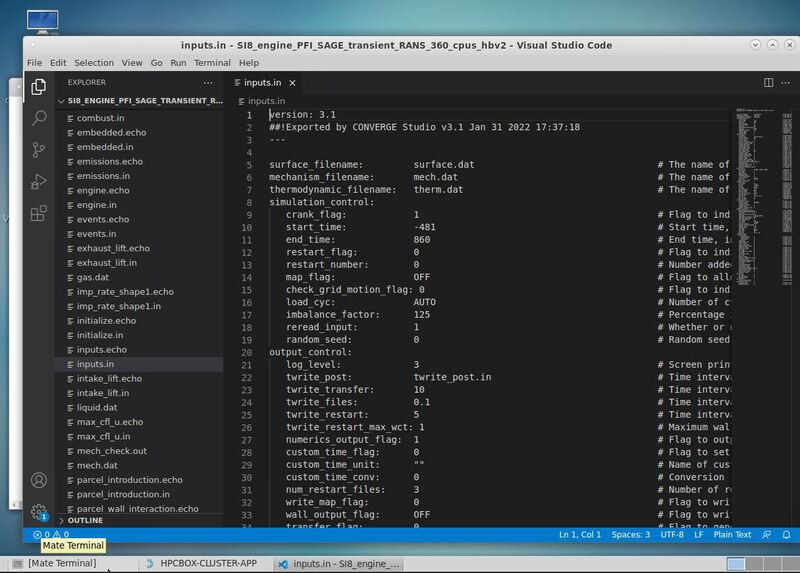

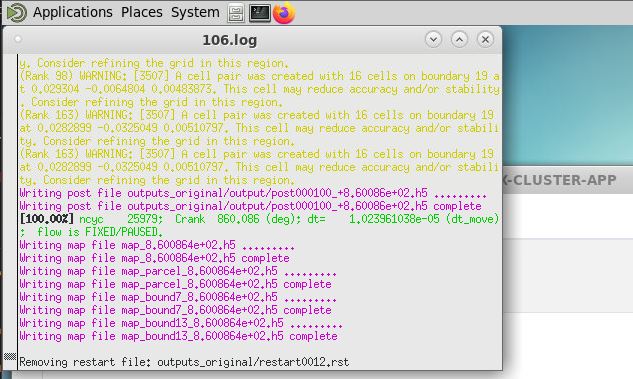

CONVERGE support in HPCBOX

HPCBOX provides end-to-end support for the entire CONVERGE user workflow. Its fully interactive user experience lets CONVERGE users perform pre-processing, solving, and post-processing directly on an HPCBOX cluster. HPCBOX clusters for CONVERGE are optimized to deliver the best possible performance available on the cloud, using Microsoft Azure’s Milan-X based HBv3 instance types for solving and NV instances with OpenGL-capable NVIDIA graphics cards for an optimal user experience with CONVERGE Studio and Tecplot for CONVERGE. HPCBOX also sets up the most suitable MPI and networking configuration, specifically HPC-X, so that large-scale models spanning multiple compute nodes get the best possible performance over Azure’s state-of-the-art InfiniBand HDR network.

Using CONVERGE on HPCBOX

Users can create application pipelines seamlessly by using HPCBOX’s CONVERGE application. HPCBOX automatically proposes the most suitable configuration for every step in the workflow. For example, selecting CONVERGE_Studio automatically triggers the use of a graphics node powered by an OpenGL-capable NVIDIA graphics card. Furthermore, depending on the model, users can set the number of MPI tasks on each compute node involved in the computation. This allows for an optimal configuration with the required amount of memory for each CPU core involved in the MPI communication. With the fully interactive user experience delivered by HPCBOX, CONVERGE users can easily edit their input files directly on the cluster and work the same way as they would on their local workstations or laptops. The entire HPC optimization is handled automatically by HPCBOX, reducing the learning curve for users who don’t have much experience with HPC clusters.
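
To make the trade-off behind the MPI tasks-per-node setting concrete, here is a minimal Python sketch. It assumes an HBv3 node with 448 GB of memory and 120 cores (figures from Azure's public HBv3 specification, not from HPCBOX itself), and it ignores memory reserved for the operating system. The function names and the per-task memory requirement are hypothetical and are not part of HPCBOX or CONVERGE.

```python
def max_tasks_per_node(node_mem_gb: float, cores_per_node: int,
                       mem_per_task_gb: float) -> int:
    """Largest MPI task count per node that still leaves each task
    at least mem_per_task_gb of memory, capped at the core count."""
    if mem_per_task_gb <= 0:
        raise ValueError("mem_per_task_gb must be positive")
    limit_by_memory = int(node_mem_gb // mem_per_task_gb)
    return max(0, min(cores_per_node, limit_by_memory))


def memory_per_task(node_mem_gb: float, tasks_per_node: int) -> float:
    """Memory available to each MPI task when tasks share a node evenly."""
    if tasks_per_node <= 0:
        raise ValueError("tasks_per_node must be positive")
    return node_mem_gb / tasks_per_node


if __name__ == "__main__":
    # Assumed node shape: 448 GB memory, 120 cores.
    print(max_tasks_per_node(448, 120, mem_per_task_gb=8))
    print(memory_per_task(448, tasks_per_node=64))
```

A memory-hungry model would run with fewer tasks per node than there are cores, while a light model can use every core.
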
Users will also be able to track the progress of their simulation in real time within the HPCBOX environment. Additionally, using the connect/disconnect feature of HPCBOX, users can start a simulation on the cluster from one location and connect from anywhere else to follow up on it. Convergent Science support personnel will be able to connect to the user’s desktop on the HPCBOX cluster and provide real-time support when required. This eliminates the need to transfer model files back and forth between the user and the support team and improves the support experience.

Get Started

To get started with using HPC on Azure for your Convergent Science products, get in touch with HPCBOX support to discuss further. Please use this Contact Form to get in touch!

About Convergent Science

Convergent Science (www.convergecfd.com) is an innovative, rapidly expanding computational fluid dynamics (CFD) company. Our flagship product, CONVERGE CFD software, eliminates the grid generation bottleneck from the simulation process. Founded in 1997 by graduate students at the University of Wisconsin-Madison, Convergent Science was a CFD consulting company in its early years. In 2008, the first CONVERGE licenses were sold and the company transitioned to a CFD software company. Convergent Science remains headquartered in Madison, Wisconsin, with offices in the United States, Europe, and India and distributors around the globe.

About Drizti Inc.

Toronto, Canada based Drizti (www.drizti.com) delivers Personal Supercomputing. We believe that access to, and use of, a supercomputer should be as straightforward as using a personal computer. We’ve set out to upgrade the supercomputing experience for users with our expertise in High Performance Computing (HPC), High Throughput Computing, distributed system architecture and HPC cloud design.
We aim to make scalable computing accessible to every innovator, helping them compete and innovate with the same freedom they enjoy on their personal workstations and laptops. Drizti’s HPCBOX platform delivers the same rich desktop experience they are used to when building complex products and applications locally, empowers them with workflow capabilities to streamline their application pipelines, and automatically optimizes the underlying HPC infrastructure for every step in the workflow.

Author

Dev S. Founder and CTO, Drizti Inc

This post is short since it's an update to a previous post which can be found here.

Early Holiday Gift

On November 8th, 2021, Microsoft delivered an early Holiday Gift by announcing the preview availability of an upgraded version of the Azure HBv3 virtual machines. This upgraded instance was enhanced by 3rd Gen AMD EPYC™ processors with AMD 3D V-cache, codenamed “Milan-X”.

As a Microsoft Partner that stays at the forefront of HPC in the cloud and delivers HPCBOX, one of the easiest to use and scale HPC cloud platforms, we at Drizti had to get our hands on this new hardware and make sure HPCBOX could support it immediately at GA. So we signed up for the private preview, and Microsoft was kind enough to give us access around late November/early December.

Technical Specification

I won't go into much detail on the technical specification of the upgraded SKU, since a lot of information and initial benchmark results have been provided on this Azure Blog and this Microsoft Tech Community article. In short, the upgraded SKU has the same InfiniBand capability as the original HBv3 (HDR), the same 448 GB of memory and the same local scratch NVMe. The main change is the switch to AMD EPYC™ processors with AMD 3D V-cache, codenamed “Milan-X”, which significantly boosts the L3 cache to around 1.5 gigabytes on a dual-socket HBv3 instance.
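
The 1.5 GB figure follows from simple arithmetic, sketched below in Python. The sketch assumes 768 MB of L3 cache per Milan-X socket and 120 cores exposed per HBv3 VM. Neither number is stated in this post, so treat both as assumptions taken from AMD's and Azure's public specifications.

```python
L3_PER_SOCKET_MB = 768   # assumption: Milan-X L3 cache per socket
SOCKETS_PER_VM = 2       # dual-socket HBv3 instance, as stated above
CORES_PER_VM = 120       # assumption: cores exposed on a full HBv3 VM


def total_l3_mb(l3_per_socket_mb: int = L3_PER_SOCKET_MB,
                sockets: int = SOCKETS_PER_VM) -> int:
    """Total L3 cache across all sockets, in MB."""
    return l3_per_socket_mb * sockets


def l3_per_core_mb(cores: int = CORES_PER_VM) -> float:
    """Average L3 cache available per core, in MB."""
    return total_l3_mb() / cores


if __name__ == "__main__":
    print(f"Total L3: {total_l3_mb()} MB ({total_l3_mb() / 1024} GB)")
    print(f"L3 per core: {l3_per_core_mb():.1f} MB")
```
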

Tests and Amazing Results

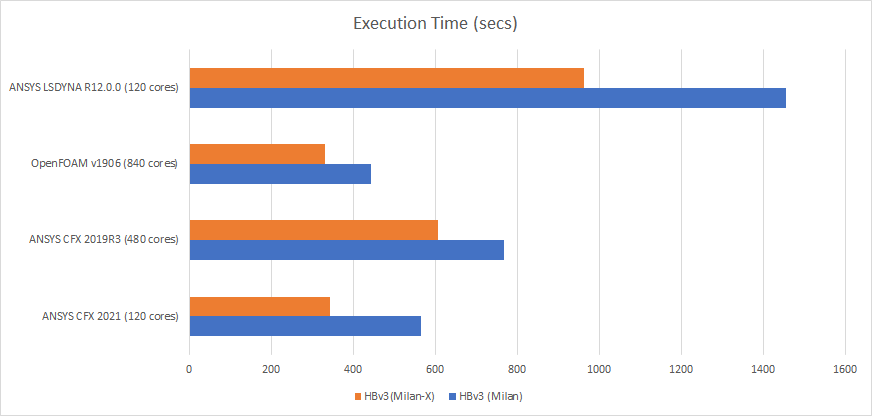

We performed a few tests to make sure that the HPCBOX platform was ready to support this upgraded SKU once it was released. We highlight the tests and the execution time speedup we noticed for them. I am not including case names and details here because some of them are customer model files. However, if you would like to get more details feel free to contact us.

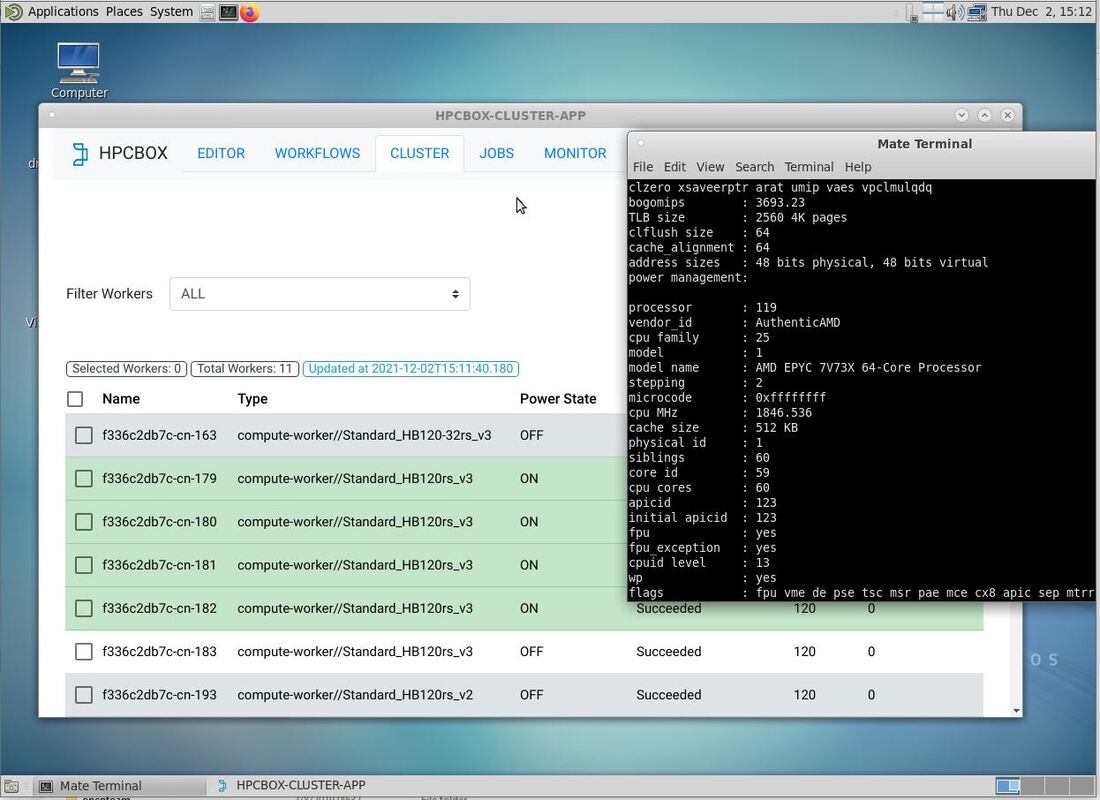

In the above screenshot, you can see 4 powered-on HBv3 compute workers on an HPCBOX cluster. These HBv3 instances are the upgraded version of the SKU and on the right you can see the CPU information.

The tests were run on an HPCBOX cluster using CentOS 7.X as the base image.

In the chart and table above, we can clearly see a good improvement in performance using Milan-X. The larger cache size seems to give a good boost for the applications we tested and it appears that this boost will most likely be experienced with other workloads as well.
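
For readers who want to run the same comparison on their own cases, execution-time speedup is usually computed as the ratio of the baseline wall-clock time to the new wall-clock time. The Python sketch below shows that calculation. The function names are hypothetical, and the numbers in the usage lines are placeholders, not measurements from the tests described here.

```python
def speedup(baseline_seconds: float, upgraded_seconds: float) -> float:
    """Ratio of baseline run time to upgraded run time (>1 means faster)."""
    if baseline_seconds <= 0 or upgraded_seconds <= 0:
        raise ValueError("run times must be positive")
    return baseline_seconds / upgraded_seconds


def percent_time_saved(baseline_seconds: float, upgraded_seconds: float) -> float:
    """Reduction in wall-clock time, as a percentage of the baseline."""
    if baseline_seconds <= 0 or upgraded_seconds <= 0:
        raise ValueError("run times must be positive")
    return 100.0 * (baseline_seconds - upgraded_seconds) / baseline_seconds


if __name__ == "__main__":
    # Placeholder timings only; substitute your own measured run times.
    print(speedup(100.0, 80.0))
    print(percent_time_saved(100.0, 80.0))
```
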

Availability in HPCBOX

Being at the forefront of HPC in the cloud, specifically on Microsoft Azure, Drizti is ready for the GA release of the upgraded HBv3 instances, and we will continue our tests and keep providing feedback to Microsoft. We still have a few more tests to conduct, including making sure our AutoScaler performs as expected with the Milan-X instances. It appears that the new HBv3 will be a drop-in replacement in HPCBOX and will most likely work out of the box with all the functionality delivered by HPCBOX.

Conclusion

Microsoft Azure has been the leader in delivering cutting-edge infrastructure for HPC in the cloud, and we are privileged to partner with the Azure HPC team. We had the opportunity not only to be a launch partner for the original HBv3 "Milan" instances, but now also to be one of the early partners testing the compatibility of these upgraded Milan-X SKUs. AMD EPYC "Milan" was a big upgrade for HPC, and with Milan-X it appears that AMD has advanced further and delivered another cutting-edge CPU with amazing performance. Drizti, with our HPCBOX platform on Microsoft Azure, is able to deliver a fully interactive supercomputing experience for our users, and it's very exciting that we can upgrade our end users and accelerate their innovation in minutes by just upgrading their compute nodes! Contact us to learn how you can get rid of in-house hardware and/or your existing cloud presence and get a seamless HPC upgrade to HPCBOX on Azure.

Author

Dev S. Founder and CTO, Drizti Inc

All third-party product and company names are trademarks or registered trademarks of their respective holders. Use of them does not imply any affiliation or endorsement by them.

We are pleased to announce preview support in HPCBOX for NVIDIA A100 powered NDv4 instances on Microsoft Azure, specifically Standard_ND96asr_v4. As per Microsoft’s documentation, the ND A100 v4-series uses 8 NVIDIA A100 Tensor Core GPUs, each with a 200 Gigabit Mellanox InfiniBand HDR connection and 40 GB of GPU memory.
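
The per-node and multi-node totals implied by that specification can be worked out directly. The Python sketch below uses only the figures quoted above (8 GPUs per node, 40 GB per GPU, one 200 Gb/s link per GPU). The names `NodeSpec` and `cluster_totals` are illustrative, and the bandwidth values are theoretical line rates, not measured throughput.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    gpus: int = 8                # GPUs per NDv4 node
    gpu_mem_gb: int = 40         # memory per GPU, in GB
    ib_gbit_per_gpu: int = 200   # InfiniBand HDR line rate per GPU, in Gb/s


def cluster_totals(nodes: int, spec: NodeSpec = NodeSpec()) -> dict:
    """Aggregate GPU count, GPU memory and theoretical IB bandwidth."""
    if nodes < 0:
        raise ValueError("nodes must be non-negative")
    gpus = nodes * spec.gpus
    ib_gbit = gpus * spec.ib_gbit_per_gpu
    return {
        "gpus": gpus,
        "gpu_mem_gb": gpus * spec.gpu_mem_gb,
        "ib_gbit_per_s": ib_gbit,
        "ib_gbyte_per_s": ib_gbit / 8,  # 8 bits per byte
    }


if __name__ == "__main__":
    for n in (1, 4):
        print(n, cluster_totals(n))
```

With four nodes, `cluster_totals(4)["gpus"]` gives 32, the GPU count that appears in the monitoring charts described in this post.
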

Introduction

This blog post will be the first in what will hopefully be a two-part series with more information to share later. But, for now, using these machines was so exciting that an initial post was well worth it.

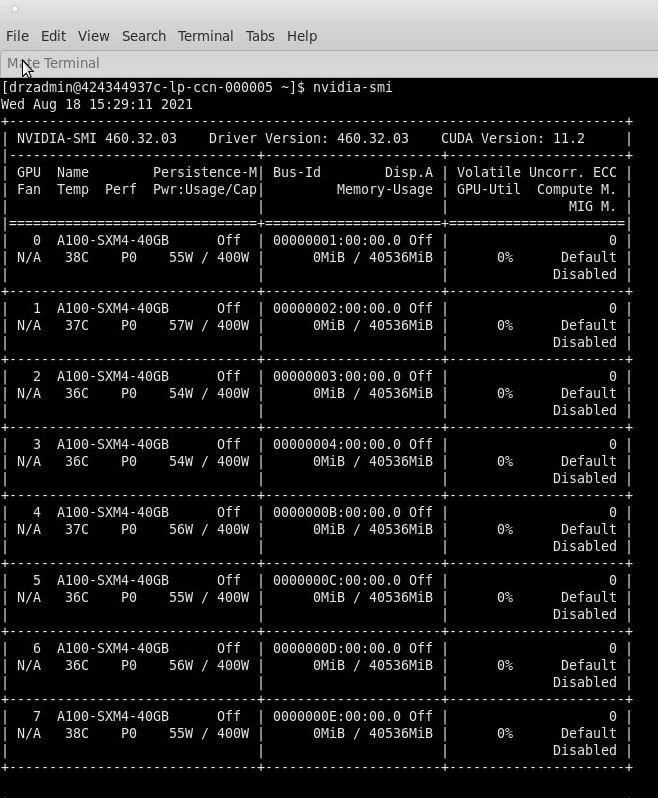

Drizti’s HPCBOX platform delivers a fully interactive turnkey HPC solution that targets end users directly and comes with expert HPC support from Drizti. This gives end users a single point of contact and a fully integrated solution that has already been optimized, or can be optimized by our HPC experts in collaboration with users, for custom codes developed in-house. The NDv4 instances are cutting edge, and Microsoft Azure is probably the only public cloud vendor to offer this kind of machine configuration for applications that can effectively use multiple GPUs. The screenshot below shows the output of nvidia-smi on one of these instances.

Not one but many for extreme scale

At Drizti, we like to make sure we can offer a truly super-scale computing setup with a fully interactive Personal Supercomputing experience. We want our users to be able to use supercomputers the same way they use their PCs or workstations, without the learning curve and waiting time that usually stand in the way of using supercomputing technology efficiently. Therefore, we always try to challenge HPCBOX and our HPC capabilities, and in the case of NDv4, we used not one but multiple instances to run at massive scale and exercise all the GPUs and InfiniBand links at maximum throughput. It was an amazing experience.

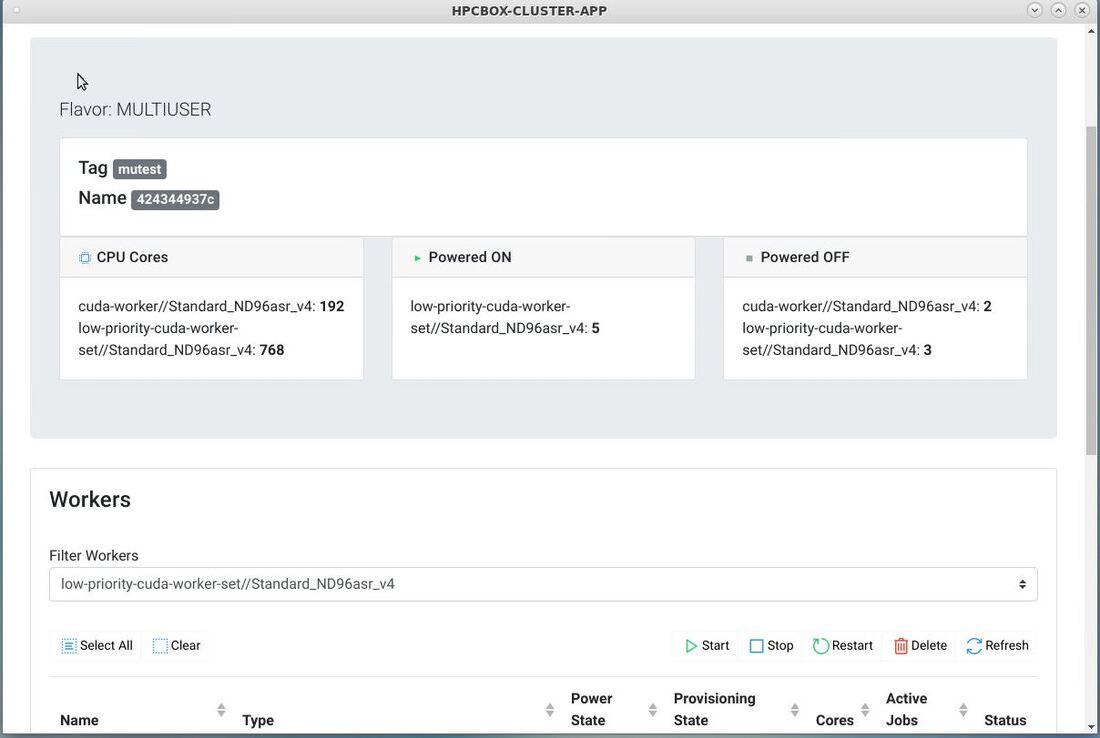

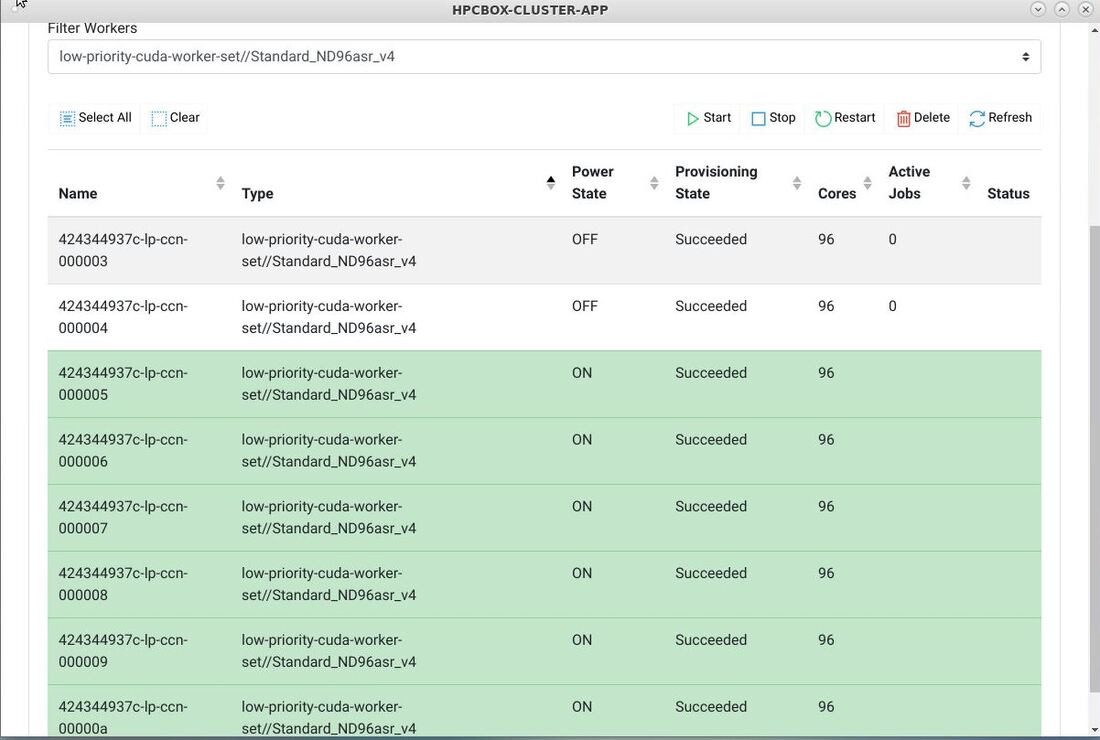

In this screenshot we see multiple NDv4 instances attached to the HPCBOX cluster and ready for use.

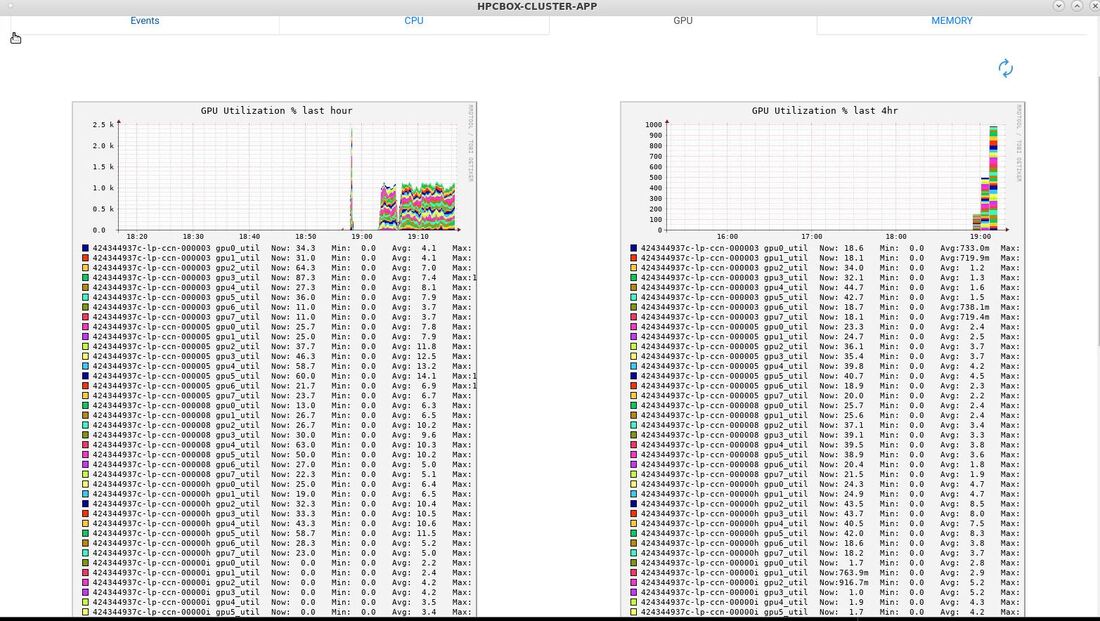

In the screenshot below, you can see some of the HPCBOX Monitoring charts showing GPU utilization (32 GPUs in this case).

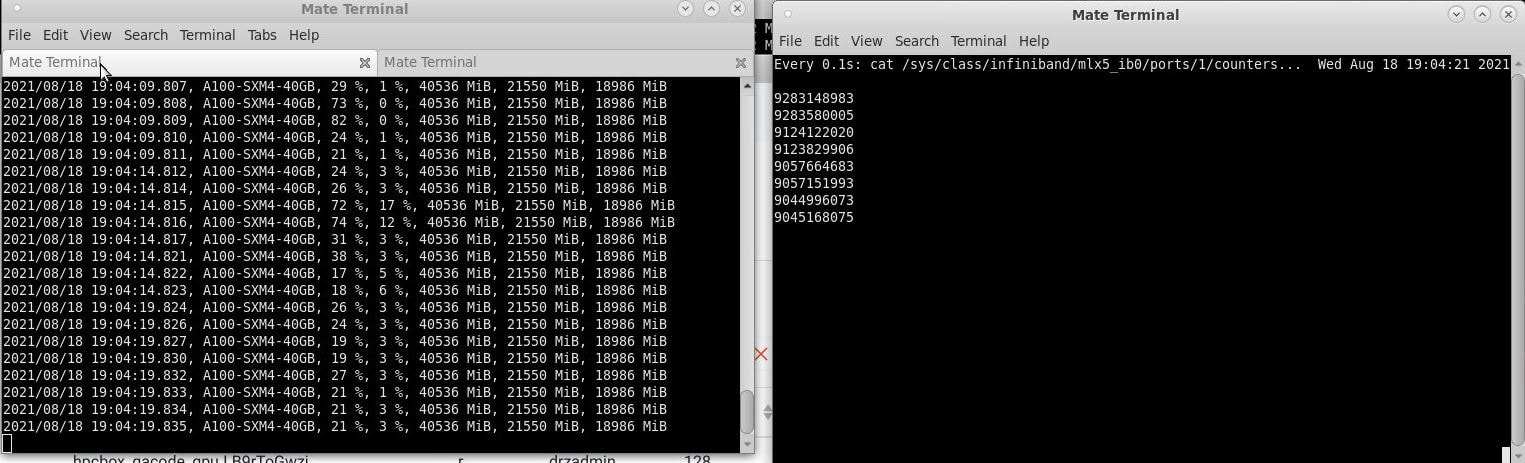

The output below shows a device-device bandwidth test across two nodes over InfiniBand.

In the screenshot below, you can see a snapshot of the IB links and the GPU utilization from a four node test run.

HPCBOX AutoScaler to optimize budget spend

We recently announced support for low-priority spot-priced instances in HPCBOX AutoScaler. Combining the NDv4 instances with the HPCBOX AutoScaler is a nice way to optimize your budget spend when running non-critical jobs that can be rescheduled and restarted when nodes get pre-empted. The nice thing about HPCBOX is that one can have both standard and low-priority instances on the same cluster, and users can target different classes of machines based on the importance of the jobs they are submitting.
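
The idea of targeting different machine classes by job importance can be sketched as a small routing rule in Python. This is purely illustrative: the pool names, the `Job` fields and `choose_pool` are hypothetical and do not represent the HPCBOX AutoScaler's actual interface.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    critical: bool      # must finish without interruption
    restartable: bool   # can be rescheduled and restarted after pre-emption


def choose_pool(job: Job) -> str:
    """Pick a (hypothetical) node pool for a job.

    Critical jobs, and jobs that cannot be restarted, go to standard
    instances. Everything else can run on cheaper low-priority
    instances that may be pre-empted.
    """
    if job.critical or not job.restartable:
        return "standard"
    return "low-priority"


if __name__ == "__main__":
    jobs = [
        Job("design-review-run", critical=True, restartable=True),
        Job("parameter-sweep", critical=False, restartable=True),
        Job("long-run-no-checkpoint", critical=False, restartable=False),
    ]
    for job in jobs:
        print(job.name, "->", choose_pool(job))
```
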

More to come

This post highlights how easy it is to access and use an extreme scale HPC cluster with the fully interactive user experience delivered by HPCBOX. Furthermore, with the personalized HPC support delivered by Drizti, HPC is far easier to use effectively than it used to be, and that is why we call this Personal Supercomputing!

Over time, we will perform further tests, analysis and optimizations, and try different applications on the NDv4s. Hopefully, we will be able to share some of those experiences in a future post.

Availability

Get in touch with us to use HPCBOX and accelerate your innovation with extreme scale Personal Supercomputing!

Contact Us

Author

Dev S. Founder and CTO, Drizti Inc
